GAN(对抗生成网络:Generative Adversarial Networks)是一类无监督学习的神经网络模型。

在GAN中第一个网路叫做生成网络$G(Z)$,第二个网络叫做鉴别网络$D(X)$

$$

GAN实现:1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

X = tf.placeholder(tf.float32, shape=[None , 784 ], name='X' )

D_W1 = tf.Variable(xavier_init([784 , 128 ]), name='D_W1' )

D_b1 = tf.Variable(tf.zeros(shape=[128 ]), name='D_b1' )

D_W2 = tf.Variable(xavier_init([128 , 1 ]), name='D_W2' )

D_b2 = tf.Variable(tf.zeros(shape=[1 ]), name='D_b2' )

theta_D = [D_W1, D_W2, D_b1, D_b2]

Z = tf.placeholder(tf.float32, shape=[None , 100 ], name='Z' )

G_W1 = tf.Variable(xavier_init([100 , 128 ]), name='G_W1' )

G_b1 = tf.Variable(tf.zeros(shape=[128 ]), name='G_b1' )

G_W2 = tf.Variable(xavier_init([128 , 784 ]), name='G_W2' )

G_b2 = tf.Variable(tf.zeros(shape=[784 ]), name='G_b2' )

theta_G = [G_W1, G_W2, G_b1, G_b2]

def generator (z) :

G_h1 = tf.nn.relu(tf.matmul(z, G_W1) + G_b1)

G_log_prob = tf.matmul(G_h1, G_W2) + G_b2

G_prob = tf.nn.sigmoid(G_log_prob)

return G_prob

def discriminator (x) :

D_h1 = tf.nn.relu(tf.matmul(x, D_W1) + D_b1)

D_logit = tf.matmul(D_h1, D_W2) + D_b2

D_prob = tf.nn.sigmoid(D_logit)

return D_prob, D_logit

代码中generator(z)输入100维的向量,并返回784维的向量,表示MNIST数据(28x28),z是$G(Z)$的先验。discriminator(x)输入MNIST图片并返回表示真实MNIST图片的可能性。

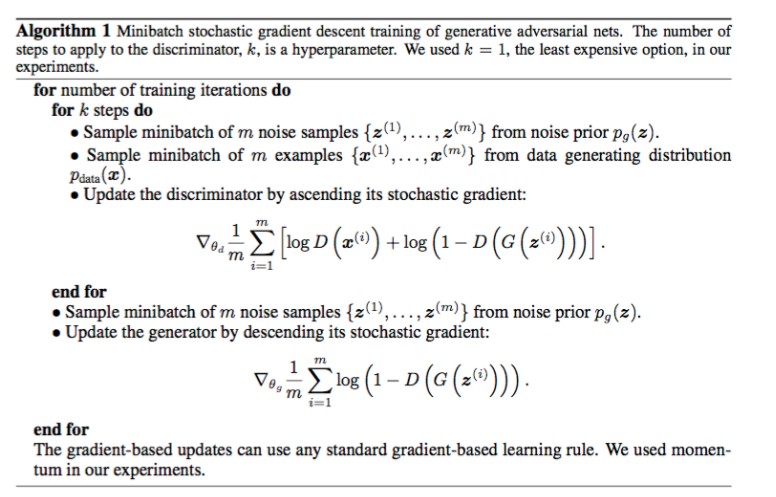

然后声明GAN的训练过程。论文中的训练算法如下:

1

2

3

4

5

6

G_sample = generator(Z)

D_real, D_logit_real = discriminator(X)

D_fake, D_logit_fake = discriminator(G_sample)

D_loss = -tf.reduce_mean(tf.log(D_real) + tf.log(1. - D_fake))

G_loss = -tf.reduce_mean(tf.log(D_fake))

损失函数加符号是因为公式来计算最大值,然而TensorFlow中优化器只能计算最小值。

然后根据上面的损失函数来训练对抗网络:1

2

3

4

5

6

7

8

9

10

11

12

13

14

D_solver = tf.train.AdamOptimizer().minimize(D_loss, var_list=theta_D)

G_solver = tf.train.AdamOptimizer().minimize(G_loss, var_list=theta_G)

def sample_Z (m, n) :

'''Uniform prior for G(Z)'''

return np.random.uniform(-1. , 1. , size=[m, n])

for it in range(1000000 ):

X_mb, _ = mnist.train.next_batch(mb_size)

_, D_loss_curr = sess.run([D_solver, D_loss], feed_dict={X: X_mb, Z: sample_Z(mb_size, Z_dim)})

_, G_loss_curr = sess.run([G_solver, G_loss], feed_dict={Z: sample_Z(mb_size, Z_dim)})

最后得到结果:

GAN training

参考资料: